How many robots does it take to screw in a light bulb? The answer: just one, assuming you’re talking about a new soft robotic gripper developed by engineers at the University of California San Diego.

The engineering team has designed and built a gripper that can pick up and manipulate objects without needing to see them or to be trained. The gripper is unique because it brings together three different capabilities: it can twist objects, it can sense objects, and it can build models of the objects it’s manipulating. This allows the gripper to operate in low-light and low-visibility conditions, for example.

The engineering team, led by Michael T. Tolley, a roboticist at the Jacobs School of Engineering at UC San Diego, presented the gripper at the International Conference on Intelligent Robots and Systems (IROS), held Sept. 24 to 28 in Vancouver, Canada.

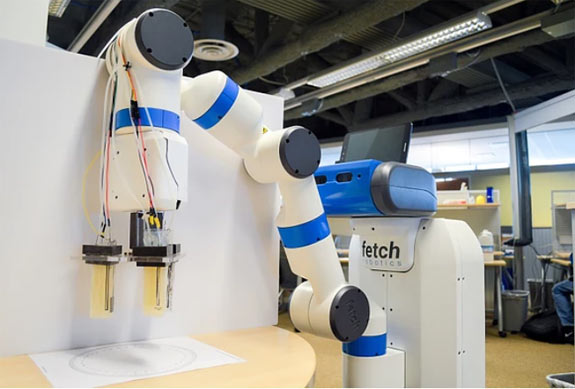

Researchers tested the gripper on an industrial Fetch Robotics robot and demonstrated that it could pick up, manipulate and model a wide range of objects, from light bulbs to screwdrivers. “We designed the device to mimic what happens when you reach into your pocket and feel for your keys,” said Tolley.

The gripper has three fingers. Each finger is made of three soft, flexible pneumatic chambers, which move when air pressure is applied. This gives the gripper more than one degree of freedom, so it can manipulate the objects it’s holding. For example, the gripper can turn screwdrivers, screw in light bulbs and even hold pieces of paper, thanks to this design.

Each finger is also covered with a smart, sensing skin. The skin is made of silicone rubber embedded with sensors made of conducting carbon nanotubes. The sheets of rubber are rolled up, sealed and slipped onto the flexible fingers to cover them like skin.

The conductivity of the nanotubes changes as the fingers flex, which allows the sensing skin to detect and record when the fingers are moving and coming into contact with an object. The data the sensors generate is transmitted to a control board, which combines the information to create a 3D model of the object the gripper is manipulating. It’s a process similar to a CT scan, where 2D image slices add up to a 3D picture.
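To make the CT scan comparison concrete, here is a minimal Python sketch of how 2D slices could be stacked into a 3D point cloud. It is an illustration only, not the team’s actual processing pipeline: the function names, the circular slice shape, the radii and the slice spacing are all made-up assumptions.

```python
import math

def contact_slice(radius_mm, n_points=8):
    """Hypothetical 2D slice: contact points evenly spaced on a circle."""
    return [
        (radius_mm * math.cos(2 * math.pi * k / n_points),
         radius_mm * math.sin(2 * math.pi * k / n_points))
        for k in range(n_points)
    ]

def stack_slices(slices, spacing_mm):
    """Stack 2D slices into a 3D point cloud; slice i sits at height i * spacing_mm."""
    cloud = []
    for i, points in enumerate(slices):
        z = i * spacing_mm
        cloud.extend((x, y, z) for x, y in points)
    return cloud

# Made-up radii loosely tracing a bulb-like profile (assumed values, not measured data).
radii_mm = [13.0, 15.0, 25.0, 30.0, 25.0]
cloud = stack_slices([contact_slice(r) for r in radii_mm], spacing_mm=10.0)
print(len(cloud), "points in the 3D model")
```

Each slice here stands in for one set of contact readings taken at a given height. In a real system the points would come from the sensing skin rather than from a formula.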

The breakthroughs were possible because of the team’s diverse expertise and their experience in the fields of soft robotics and manufacturing, Tolley said.

Next steps include adding machine learning and artificial intelligence to the data processing so that the soft robotic gripper will be able to identify the objects it’s manipulating, rather than just model them. Researchers are also investigating using 3D printing for the gripper’s fingers to make them more durable.